Natural Language Processing II

Prerequisites

- Basic knowledge of programming in Python

- High-school-level mathematics

- Basics of machine learning at the level of our course Introduction to Machine Learning

- Knowledge at the level of our basic Natural Language Processing course

Abstract

In this course, we follow up on the basic Natural Language Processing course with advanced topics. We will mainly focus on text data preprocessing and state-of-the-art applications of deep learning in NLP, working in particular with so-called Transformers. Using transfer learning, we will show how to exploit large pre-trained neural networks for a variety of practical applications.

Outline

- Preprocessing
  - a few words about encoding; Unicode normalization
  - traditional tokenization (simple methods, spaCy, Moses)
  - subword tokenization (byte-pair encoding, WordPiece, SentencePiece)
  - cleaning (deduplication, boilerplate removal)

- Word embeddings
  - universal ideas
  - skip-gram implementation

- Machine translation with RNNs
  - LSTM and GRU overview
  - RNN-based translation implementation

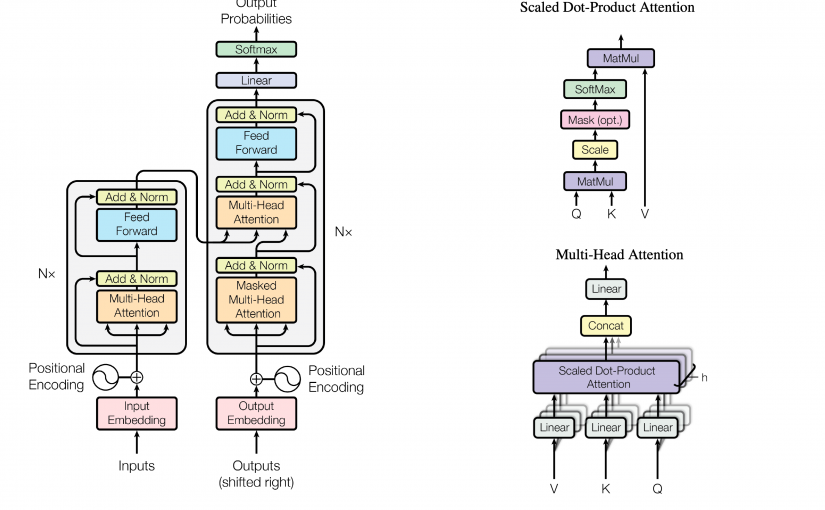

- Transformers
  - "Attention Is All You Need"
  - transformer architecture
  - GPT-3, ChatGPT
  - BERT
  - XLNet

- Examples of transfer learning in NLP
  - text classification
  - named entity recognition
  - question answering
  - language generation, chatbots
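To give a flavor of the subword tokenization topic in the outline, here is a minimal sketch of how byte-pair encoding learns its merge rules: start from characters, then repeatedly merge the most frequent adjacent symbol pair. It assumes a small `{word: count}` dictionary as input and the common `</w>` end-of-word marker; the function names and toy counts are hypothetical and illustrative, not course material. It covers plain BPE only, not WordPiece or SentencePiece.

```python
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs across the vocabulary, weighted by word frequency."""
    pairs = Counter()
    for word, freq in vocab.items():
        symbols = word.split()
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair, vocab):
    """Rewrite every word so that adjacent occurrences of `pair` become one symbol."""
    a, b = pair
    merged = {}
    for word, freq in vocab.items():
        symbols = word.split()
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and symbols[i] == a and symbols[i + 1] == b:
                out.append(a + b)
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[" ".join(out)] = freq
    return merged


def learn_bpe(word_counts, num_merges):
    """Learn up to `num_merges` merge rules from a {word: count} dictionary (hypothetical helper)."""
    # Each word starts as space-separated characters plus an end-of-word marker.
    vocab = {" ".join(word) + " </w>": freq for word, freq in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        # Greedily merge the most frequent pair (ties: the first pair seen wins).
        best = max(pairs, key=pairs.get)
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab


if __name__ == "__main__":
    # Toy word counts, made up for illustration.
    counts = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
    merges, vocab = learn_bpe(counts, num_merges=3)
    print(merges)  # → [('e', 's'), ('es', 't'), ('est', '</w>')]
```

The learned merges are applied in the same order to split new words into subword units, which is how a subword vocabulary can represent words never seen during training.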
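For the skip-gram item in the outline, the first step is turning a token sequence into (center, context) training pairs. The sketch below assumes already tokenized input and a symmetric context window; the name `skipgram_pairs` and the `window` parameter are illustrative assumptions. It shows data preparation only, not the embedding model or its training.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every token within `window` positions of the center."""
    pairs = []
    for i, center in enumerate(tokens):
        # Clip the context window at the sequence boundaries.
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


if __name__ == "__main__":
    sentence = "the quick brown fox".split()
    print(skipgram_pairs(sentence, window=1))
    # → [('the', 'quick'), ('quick', 'the'), ('quick', 'brown'),
    #    ('brown', 'quick'), ('brown', 'fox'), ('fox', 'brown')]
```

In a full skip-gram model, each pair becomes one training example in which the center word's embedding is used to predict the context word; a larger `window` yields more pairs per sentence.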

Dates

If you wish to enroll in this course, please contact us at info@mlcollege.com.