Advanced Deep Learning Techniques

Prerequisites

- Basic knowledge of programming in Python

- High-school-level mathematics

- Basics of machine learning at the level of our course Introduction to Machine Learning

Abstract

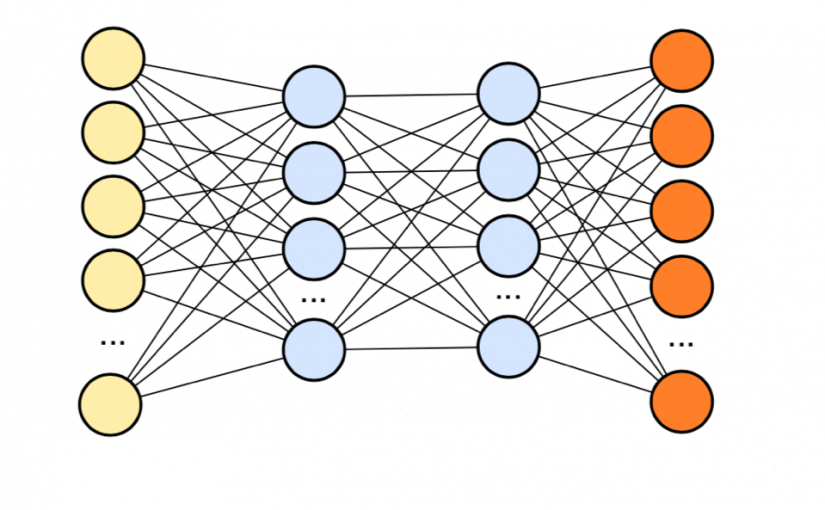

The course is intended for people who are looking for a deeper understanding of artificial neural networks, especially so-called deep learning. We will build on the basic machine learning principles covered in our course Introduction to Machine Learning. We will pay special attention to the interpretability and explainability of machine learning models.

Outline:

- Neural network architectures (feed-forward, recurrent, convolutional, generative, autoencoders, U-Net, GAN, attention layer)

- Optimizers and their evolution (Steepest Gradient Descent, Stochastic Gradient Descent, Mini-Batch Gradient Descent, Nesterov Accelerated Gradient, Adagrad, AdaDelta, Adam, Learning rate tuning)

- Loss functions and their properties (Mean squared error, Mean absolute error, Negative Log Likelihood – cross entropy)

- Regularization in Neural Networks (Dropout, Early stopping, Data augmentation, Batch and layer normalization)

- Initialization (Gradient vanishing problem, Zero initialization, He initialization, Xavier initialization)

- Semi-supervised learning (Pseudo Labeling, Mean-Teacher, PI-Model)

- Practical examples of semi-supervised techniques applications

- Confidence estimation (Logit analysis, Confidence networks)

- Practical examples of confidence estimation

- AutoML approaches (Hyper-parameter optimization, grid search, Bayesian optimization, Meta-Learning, Neural architecture search)

- Practical examples with AutoKeras

- ML Explainability (Interpretable models, Partial Dependence Plot, Permutation feature importance, Surrogate models, Activation Maximization, Grad CAM)

Dates

If you wish to enroll in this course, please contact us at info@mlcollege.com.